The Use Cases in the DEEP-HybridDataCloud project have been chosen from several communities and scientific disciplines. They provide a representative sample of demanding requirements for the DEEP Learning as a Service Platform.

Lattice QCD for massive data analysis

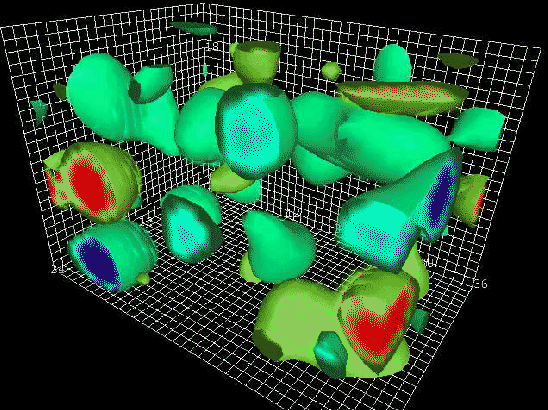

Quantum Chromodynamics (QCD) is the theory that describes the strong interaction, which is responsible for the confinement of quarks inside hadrons. Investigating the properties of QCD requires different techniques depending on the energy scale of interest. In particular, at low energies, around the scale of the proton mass where quark confinement takes place, we must rely on non-perturbative methods, notably Lattice QCD simulations. Competitive Lattice QCD simulations nowadays run across thousands of cores on HPC systems with low-latency interconnects. The amount of data that needs to be analyzed in a medium-size project is on the order of a terabyte. The purpose of this Use Case is to serve as a pilot for designing a data configuration tool with general applicability to similar usage scenarios in other scientific areas.

Plant Classification with Deep Learning

In this era of big data, where everybody is equipped with a smartphone, collaborative citizen science platforms have sprung up, enabling users to easily share their observations. We intend to collect those freely available observations and build Deep Learning tools around them. This Use Case describes a tool to automatically identify plant species from images using Deep Learning. This can be very helpful for automatically monitoring biodiversity at a large scale, relieving scientists of the tedious task of hand-labelling images.

In addition, this tool can be easily retrained to perform image classification on different datasets by a user without expert (Machine Learning) knowledge.

Deep Learning application for monitoring through satellite imagery

With the latest missions launched by ESA, such as Sentinel, equipped with the latest multispectral sensor technologies, we face an unprecedented amount of data at spatial and temporal resolutions never reached before. Exploring the potential of this data with state-of-the-art AI techniques like deep learning could change the way we think about and protect our planet’s resources.

The applications of Machine Learning techniques range from remote object detection and terrain segmentation to meteorological prediction. Our use case will implement one of these applications to demonstrate the potential of combining satellite imagery and Machine Learning techniques in a Cloud Infrastructure.

Massive Online DataStreams

Security issues, intrusion detection, and anomaly detection are challenging for data centers, where large IT infrastructures and devices continuously and dynamically produce huge amounts of streaming data. Our solution aims to provide scalable edge technologies to support extensive data analysis and modelling. Dynamic data analytics supporting Machine and Deep Learning (ML/DL) modelling requires methods and tools for large-scale data mining to transform raw data into useful information. The information collected at the data center level (logs, intrusion detection system alerts, etc.) will be processed using ML/DL techniques to identify anomalies in resource usage and network communication, among other issues, and to support situational awareness of anomalous operating conditions.
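As a rough illustration of what flagging anomalies in a stream of resource-usage readings can look like, the following minimal Python sketch uses a simple rolling z-score. This is a deliberately basic statistical stand-in, not the ML/DL models used in the Use Case; the function name, the window size, the threshold, and the sample readings are all assumptions made for this example.

```python
from collections import deque
from statistics import mean, stdev


def rolling_zscore_anomalies(stream, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling mean.

    Hypothetical helper: a rolling z-score, not the project's ML/DL method.
    """
    recent = deque(maxlen=window)  # sliding window of the latest readings
    for index, value in enumerate(stream):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Flag values more than `threshold` standard deviations from the mean.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield index, value
        recent.append(value)


# Synthetic CPU-usage readings (percent); the spike at index 6 is made up.
cpu_usage = [21.0, 22.5, 20.8, 21.7, 22.1, 21.3, 95.0, 21.9, 22.4]
anomalies = list(rolling_zscore_anomalies(cpu_usage))
print(anomalies)
```

The generator processes one reading at a time, so it can consume an unbounded stream with constant memory. Note that a flagged value still enters the window and temporarily widens the spread, which is one reason real deployments tend to use more robust models.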

Diabetic Retinopathy detection

This use case is concerned with the classification of biomedical images (of the retina) into five disease categories or stages (from healthy to severe) using a deep learning approach. Retinopathy is a fast-growing cause of blindness worldwide, with over 400 million people at risk from diabetic retinopathy alone. The disease can be successfully treated if it is detected early. Color fundus retinal photography uses a fundus camera (a specialized low-power microscope with an attached camera) to record color images of the interior surface of the eye, in order to document the presence of disorders and monitor their change over time. Specialized medical experts interpret such images and are able to detect the presence and stage of retinal diseases such as diabetic retinopathy. However, due to a lack of suitably qualified medical specialists in many parts of the world, comprehensive detection and treatment of the disease is difficult. This use case focuses on a deep learning approach to the automated classification of retinopathy based on color fundus retinal photography images.